VR And Human Vision

Oliver Kreylos, a CS professor at UC Davis, is writing a series of blog posts on how virtual reality works. His post from January 2016 covers the lenses in head-mounted displays (HMDs). People confuse virtual reality and 3D TVs a lot. They're definitely not the same thing, and one of the biggest differentiators is the lenses in the HMD.

Dr. Kreylos makes some really good points that raise questions for me. For example:

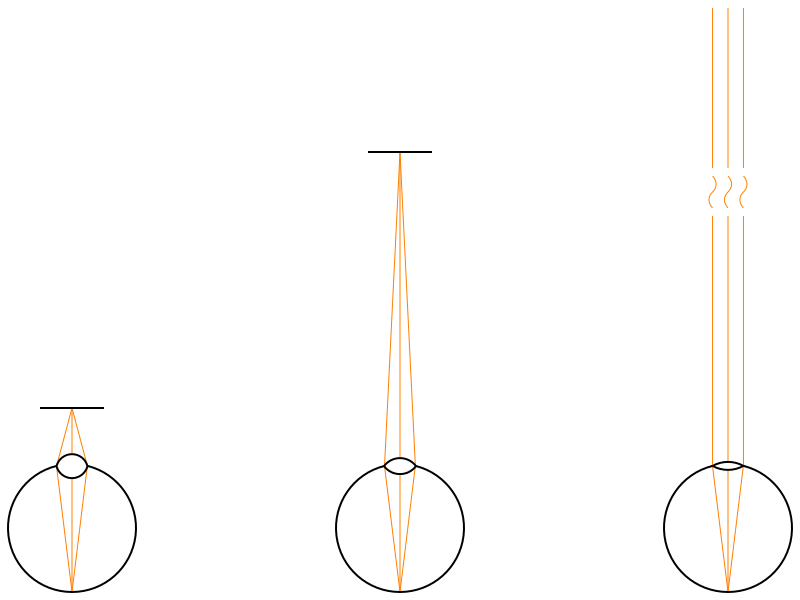

"The central observation is that the amount by which light rays from an object diverge at the eye’s lens depends on the distance from the object to the eye. If the object is close, the rays diverge at a large angle (see Figure 1, left); if the object is at a medium distance, the rays diverge less (see Figure 1, center); if the object is infinitely far away, the rays are parallel and do not diverge at all. In other words: the closer an object is to the eye, the more the eye’s lens has to bend the incoming light rays to form a sharp image on the retina."
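To put numbers on this observation: optometry usually expresses how hard the eye's lens has to work as accommodation demand in diopters, which is the reciprocal of the object distance in meters. An object at infinity sends parallel rays and needs 0 D. Below is a minimal Python sketch of that conversion; the three distances are hypothetical stand-ins for the close, medium, and infinite cases in the figure, not values from Dr. Kreylos's post.

```python
import math


def accommodation_demand(distance_m: float) -> float:
    """Focusing power (in diopters) the eye must add for an object at distance_m.

    Uses the standard approximation demand = 1 / distance (meters).
    An object at infinity sends parallel rays, so the demand is 0 D.
    """
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    if math.isinf(distance_m):
        return 0.0
    return 1.0 / distance_m


# Hypothetical example distances for the close, medium and infinite cases.
for label, d in [("close", 0.25), ("medium", 2.0), ("infinite", math.inf)]:
    print(f"{label:>8}: {accommodation_demand(d):.2f} D")
```

The numbers make the quote concrete: going from 2 m to 25 cm multiplies the focusing effort by eight, while everything beyond a few meters is already close to 0 D.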

Does this mean that the eye's lens stays bent at a larger angle no matter what we look at in an HMD? Will perceiving something as far away in VR bend the lens slightly? In other words, how much does perception compete with reality when it comes to how our eyes react?

The screenshot below depicts how the eye's lens accommodates for objects at short, medium, and infinite range.

The ciliary muscles change the shape of the human lens so that it bends light by an amount that depends on the object's distance. This lets the retina receive the sharpest possible image to send up the visual system.

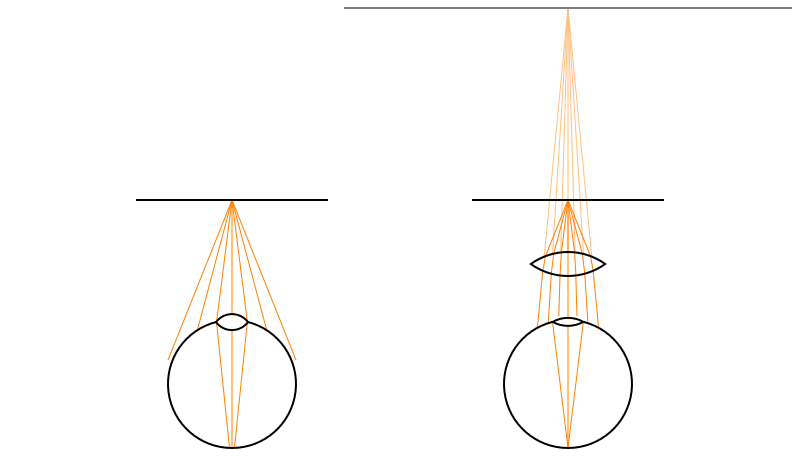

The screenshot below shows how the HMD's lens lets the eye focus on a screen sitting right up against the user's face.

Looking at very close objects for a long time can cause eye strain, and Dr. Kreylos explains how HMDs get around this issue:

"If we extend the light rays between the intermediate lens and the eye backwards (the fainter lines on the right of Figure 2), we find that they all intersect in a single point behind the real screen. To the eye, it looks exactly like a screen that is farther away and larger (the grey horizontal line on the right of Figure 2). This is called a virtual image. And that’s why viewers can comfortably focus on the screens in HMDs: they are not looking at the real screens, which are too close, but at virtual images of the real screens, which are at a much larger distance."
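The virtual image can be sketched with the Gaussian thin-lens equation, 1/s_o + 1/s_i = 1/f (real-is-positive sign convention). When the screen sits just inside the lens's focal length, s_i comes out negative, meaning the image is virtual and on the same side as the screen, farther away and magnified. The 40 mm focal length and the screen distances below are hypothetical numbers chosen for illustration, not the specs of any real headset, and the sketch ignores the small gap between the lens and the eye.

```python
import math
from dataclasses import dataclass


@dataclass
class Image:
    distance_mm: float    # negative => virtual image on the screen's side of the lens
    magnification: float  # > 1 means the image looks larger than the real screen


def thin_lens_image(focal_mm: float, screen_mm: float) -> Image:
    """Solve the thin-lens equation 1/s_o + 1/s_i = 1/f for the screen's image.

    Simplified model: ideal thin lens, distances measured from the lens.
    """
    if math.isclose(screen_mm, focal_mm):
        # Screen exactly at the focal plane: rays leave parallel, image at infinity.
        return Image(distance_mm=-math.inf, magnification=math.inf)
    s_i = 1.0 / (1.0 / focal_mm - 1.0 / screen_mm)
    return Image(distance_mm=s_i, magnification=-s_i / screen_mm)


# Hypothetical 40 mm lens; slide the screen toward the focal plane.
for screen in (35.0, 38.0, 39.0, 40.0):
    img = thin_lens_image(40.0, screen)
    if math.isinf(img.distance_mm):
        print(f"screen at {screen} mm -> virtual image at infinity")
    else:
        print(f"screen at {screen} mm -> virtual image {abs(img.distance_mm) / 1000:.2f} m away, "
              f"{img.magnification:.1f}x larger")
```

In this simplified model, the virtual image distance is set by where the screen sits relative to the lens's focal length: a millimeter or two of movement pushes the image from under a meter away out to infinity.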

What mechanism determines the distance of the virtual image in an HMD? How do designers know what the best distance is?

Inevitably, this leads to questions about the long-term impacts of looking at fixed virtual images. The DK2's virtual screen sits at 4.5 ft (the DK1's was set at infinity). Are there any studies on this? How would double-layer HMDs change the dynamic?
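Because every virtual object is drawn on that one virtual screen, the eye's focusing demand stays fixed for the whole session, wherever objects appear to be in the scene. Using the same diopter convention as above (1 / distance in meters), a quick conversion of the two headset figures quoted here; the function name is a hypothetical helper for illustration.

```python
FEET_TO_METERS = 0.3048  # exact by definition of the international foot


def focus_demand_diopters(distance_ft: float) -> float:
    """Diopters the eye must accommodate to focus at distance_ft.

    An infinite distance gives 1 / inf == 0.0, i.e. a fully relaxed lens.
    """
    return 1.0 / (distance_ft * FEET_TO_METERS)


# Virtual screen distances as stated in the text above.
for name, ft in [("DK2", 4.5), ("DK1", float("inf"))]:
    print(f"{name}: {focus_demand_diopters(ft):.2f} D")
```

So a DK2 user's eyes hold a constant demand of roughly 0.73 D, and a DK1 user's a constant 0 D, no matter how near or far the virtual content looks.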

There is much to be learned about optics in virtual reality, and I wouldn't be surprised if we are in for a lot of progress on this front in the near future.